MDS SMP Node Affinity Demonstration wiki version

Final Report for the Parallel Directory Operations Subproject on the Single Metadata Server Performance Improvements of the SFS-DEV-001 contract.

Revision History

| Date | Revision | Author |
|------|----------|--------|
| 04/11/12 | Original | R. Henwood |

Executive Summary

This document summarizes the activities undertaken during the Single Metadata Server Performance Improvements project, Subproject 1.2: Parallel Directory Operations, within the OpenSFS Lustre Development contract SFS-DEV-001 signed 7/30/2011.

Notable milestones during the project include:

- Shared-directory mknod maximum throughput increased by 76%, from 25000 to 44000, in the demonstration environment.

- Parallel Directory Operations was implemented in 2500 lines of code and landed on the Lustre master branch on March 30th, 2012, for inclusion in Lustre release 2.2.

- All assets from the project have been attached to the public ticket LU-50.
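The 76% figure in the first milestone follows directly from the two throughput values reported for the demonstration environment:

$$\frac{44000 - 25000}{25000} = \frac{19000}{25000} = 0.76 \;(76\%)$$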

Statement of work

Parallel Directory Operations allows multiple RPC service threads to operate on a single directory without contending on a single lock protecting the underlying directory in the ldiskfs file system. Creating many files in a single directory is one of the most critical use cases for HPC workloads: many applications create a separate output file for each task in a job, so hundreds of thousands of files must be created in a single directory within a short window of time. Currently, both filename lookup and file system-modifying operations such as create and unlink are protected by a single lock for the whole directory.

Parallel Directory Operations will implement a parallel locking mechanism for single ldiskfs directories, allowing multiple threads to perform lookup, create, and unlink operations in parallel. To avoid performance bottlenecks in very large directories, the number of concurrent locks possible on a single directory will increase as the directory grows.
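The idea that lock concurrency scales with directory size can be sketched as a striped lock table whose stripe count is derived from the directory's size. The C sketch below is a hypothetical sizing policy, not the mechanism used by the PDO patch: the constants `LOCK_BLOCKS_PER_STRIPE`, `MIN_STRIPES` and `MAX_STRIPES`, and the functions `dir_lock_stripes` and `dir_lock_stripe_of`, are illustrative assumptions.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical sizing policy: one lock stripe per LOCK_BLOCKS_PER_STRIPE
 * directory blocks, clamped to [MIN_STRIPES, MAX_STRIPES] and then rounded
 * up to a power of two. All three constants are illustrative only. */
#define LOCK_BLOCKS_PER_STRIPE 16u
#define MIN_STRIPES 1u
#define MAX_STRIPES 1024u   /* must itself be a power of two */

static unsigned round_up_pow2(unsigned x)
{
    unsigned p = 1;
    while (p < x)
        p <<= 1;
    return p;
}

/* Number of independent locks for a directory of dir_blocks blocks:
 * small directories get one lock, larger ones proportionally more. */
unsigned dir_lock_stripes(uint64_t dir_blocks)
{
    uint64_t want = dir_blocks / LOCK_BLOCKS_PER_STRIPE;

    if (want < MIN_STRIPES)
        want = MIN_STRIPES;
    if (want > MAX_STRIPES)
        want = MAX_STRIPES;
    return round_up_pow2((unsigned)want);
}

/* Map a filename hash to a stripe. nstripes must be a power of two,
 * so a bit mask can replace a modulo. */
unsigned dir_lock_stripe_of(uint32_t name_hash, unsigned nstripes)
{
    assert(nstripes != 0 && (nstripes & (nstripes - 1)) == 0);
    return name_hash & (nstripes - 1);
}
```

With these assumed constants, an empty directory gets a single stripe (equivalent to one directory-wide lock), a 100-block directory gets 8 stripes, and very large directories are capped at 1024. Keeping the stripe count a power of two lets a name hash be mapped to a stripe with a mask instead of a division.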

Summary of scope

In scope

- Parallel Directory Operations code development will take place against the WC-Lustre 2.x baseline.

- Modifications to ldiskfs to provide Parallel Directory Operations.

- Modifications to the OSD to exploit the parallel directory support in ldiskfs.

- New locking behavior documented in the WC-Lustre 2.x manual.

- Parallel Directory Operations supported on all Linux distributions that WC-Lustre supports.

Out of scope

- Only the EXT4 back-end file system will be supported.

- As a rule, patches to EXT4 will not be prepared for upstream inclusion.

The complete scope statement is available at http://wiki.whamcloud.com/display/opensfs/Parallel+Directory+Operations+Scope+Statement

Summary of Solution Architecture

Parallel Directory Operations (PDO) is concerned with a single component of the Lustre file system: ldiskfs. Ldiskfs organizes and locates directory entries with a hashed B-tree (htree), and each directory's htree is protected by a single mutex. This single-lock strategy is simple to understand and implement, but it is also a performance bottleneck: every directory operation must acquire the lock and hold it for its entire duration, so all operations on the directory are serialized.

The PDO project implements a new locking mechanism that makes it safe for multiple threads to concurrently search and/or modify directory entries in the htree. With PDO, MDS and OSS service threads can process multiple create, lookup, and unlink requests in the same shared directory in parallel, so users will see improved performance for these commonly performed operations.
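To make the contrast with a single directory mutex concrete, here is a minimal sketch in C of the general fine-grained locking pattern, assuming POSIX threads and a toy fixed-size hash table in place of the ldiskfs htree. All names (`pdo_dir`, `pdo_name_op`, `pdo_dir_clear`, the bucket count) are hypothetical, and this is not the PDO patch's code. A directory-wide reader-writer lock is held shared by lookup, create and unlink, which then serialize only on a per-bucket mutex; an operation that changes the whole structure takes the directory lock exclusively.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stdlib.h>
#include <string.h>

#define PDO_NBUCKETS 64   /* illustrative fixed bucket count */
#define PDO_NAME_MAX 255

struct pdo_entry {
    char name[PDO_NAME_MAX + 1];
    struct pdo_entry *next;
};

struct pdo_bucket {
    pthread_mutex_t lock;         /* protects only this bucket's chain */
    struct pdo_entry *head;
};

struct pdo_dir {
    pthread_rwlock_t tree_lock;   /* shared: name ops; exclusive: whole-dir changes */
    struct pdo_bucket bucket[PDO_NBUCKETS];
};

enum pdo_op { PDO_LOOKUP, PDO_CREATE, PDO_UNLINK };

static unsigned pdo_hash(const char *s)
{
    unsigned h = 5381;            /* djb2 string hash */
    while (*s)
        h = h * 33u + (unsigned char)*s++;
    return h % PDO_NBUCKETS;
}

void pdo_dir_init(struct pdo_dir *d)
{
    pthread_rwlock_init(&d->tree_lock, NULL);
    for (int i = 0; i < PDO_NBUCKETS; i++) {
        pthread_mutex_init(&d->bucket[i].lock, NULL);
        d->bucket[i].head = NULL;
    }
}

/* Lookup, create and unlink hold the tree lock shared and serialize only on
 * the bucket their name hashes to, so operations on different names can run
 * in parallel. Returns true if the entry was found, created or removed. */
bool pdo_name_op(struct pdo_dir *d, enum pdo_op op, const char *name)
{
    assert(name != NULL);
    if (strlen(name) > PDO_NAME_MAX)
        return false;

    struct pdo_bucket *b = &d->bucket[pdo_hash(name)];
    bool ok = false;

    pthread_rwlock_rdlock(&d->tree_lock);
    pthread_mutex_lock(&b->lock);
    struct pdo_entry **pp = &b->head;   /* link that points at the match */
    while (*pp && strcmp((*pp)->name, name) != 0)
        pp = &(*pp)->next;

    if (op == PDO_LOOKUP) {
        ok = (*pp != NULL);
    } else if (op == PDO_CREATE && *pp == NULL) {
        *pp = calloc(1, sizeof(**pp));  /* append at the chain's tail */
        if (*pp) {
            strcpy((*pp)->name, name);
            ok = true;
        }
    } else if (op == PDO_UNLINK && *pp != NULL) {
        struct pdo_entry *victim = *pp;
        *pp = victim->next;
        free(victim);
        ok = true;
    }
    pthread_mutex_unlock(&b->lock);
    pthread_rwlock_unlock(&d->tree_lock);
    return ok;
}

/* Whole-directory change (a stand-in for restructuring such as an htree
 * split): the exclusive tree lock excludes every name operation, so no
 * bucket locks are needed here. */
void pdo_dir_clear(struct pdo_dir *d)
{
    pthread_rwlock_wrlock(&d->tree_lock);
    for (int i = 0; i < PDO_NBUCKETS; i++) {
        struct pdo_entry *e = d->bucket[i].head;
        while (e) {
            struct pdo_entry *next = e->next;
            free(e);
            e = next;
        }
        d->bucket[i].head = NULL;
    }
    pthread_rwlock_unlock(&d->tree_lock);
}
```

In this sketch, two threads creating different names usually hash to different buckets and never wait for each other, whereas with a single directory mutex every operation would queue behind the same lock. The fixed hash table is only a stand-in; it is not how ldiskfs organizes its htree.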

Acceptance Criteria

The PDO patch will be accepted as functioning properly if it shows:

- Improved parallel create/remove performance in a large shared directory for narrow-stripe files (stripe count 0–4) on a multi-core server (8+ cores)

- No performance regression in other metadata performance tests

- No functional regression

The complete Solution Architecture is available at: http://wiki.whamcloud.com/display/opensfs/Parallel+Directory+Solution+Architecture

Summary of High Level Design

The design of the new htree lock required careful consideration. The High Level Design describes the new lock implementation and its API, and covers the following scenarios:

- Reading an indexed directory (readdir).

- Lookup within an indexed directory.

- Unlink within an indexed directory.

- Create within an indexed directory.
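One way to reason about these four scenarios is to assign each operation a mode on a directory-level lock and check the modes against a compatibility matrix. The C sketch below uses the six classic DLM lock modes (NL, CR, CW, PR, PW, EX), which Lustre's LDLM also provides; the operation-to-mode mapping in the hypothetical function `tree_mode_for` is an assumption for illustration and may not match the High Level Design. All identifiers are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Classic DLM lock modes. Whether the PDO htree lock uses exactly these
 * modes is an assumption of this sketch. */
enum lock_mode { LM_NL, LM_CR, LM_CW, LM_PR, LM_PW, LM_EX, LM_COUNT };

/* compat[a][b] is true when a holder in mode a and a requester in mode b
 * may hold the lock at the same time (the matrix is symmetric). */
static const bool compat[LM_COUNT][LM_COUNT] = {
    /*          NL    CR     CW     PR     PW     EX    */
    /* NL */ { true, true,  true,  true,  true,  true  },
    /* CR */ { true, true,  true,  true,  true,  false },
    /* CW */ { true, true,  true,  false, false, false },
    /* PR */ { true, true,  false, true,  false, false },
    /* PW */ { true, true,  false, false, false, false },
    /* EX */ { true, false, false, false, false, false },
};

bool modes_compatible(enum lock_mode a, enum lock_mode b)
{
    assert(a < LM_COUNT && b < LM_COUNT);
    return compat[a][b];
}

/* The four scenarios covered by the design. */
enum dir_op { OP_READDIR, OP_LOOKUP, OP_UNLINK, OP_CREATE };

/* Hypothetical mapping of each operation to a directory-level lock mode.
 * Name operations take "concurrent" modes so they can overlap; a finer
 * per-leaf lock (not modelled here) would order operations on the same
 * leaf block. readdir takes PR, which excludes concurrent modifications. */
enum lock_mode tree_mode_for(enum dir_op op)
{
    switch (op) {
    case OP_READDIR: return LM_PR;
    case OP_LOOKUP:  return LM_CR;
    case OP_UNLINK:
    case OP_CREATE:  return LM_CW;
    }
    return LM_EX;   /* unknown operation: be conservative */
}
```

Under this assumed mapping, concurrent creates, unlinks and lookups are mutually compatible at the directory level, a readdir in PR mode excludes modifications but not lookups, and any operation that needs EX, such as restructuring the tree, runs alone.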

The complete High Level Design is available at: http://wiki.whamcloud.com/display/opensfs/Parallel+Directory+High+Level+Design

Summary of Demonstration

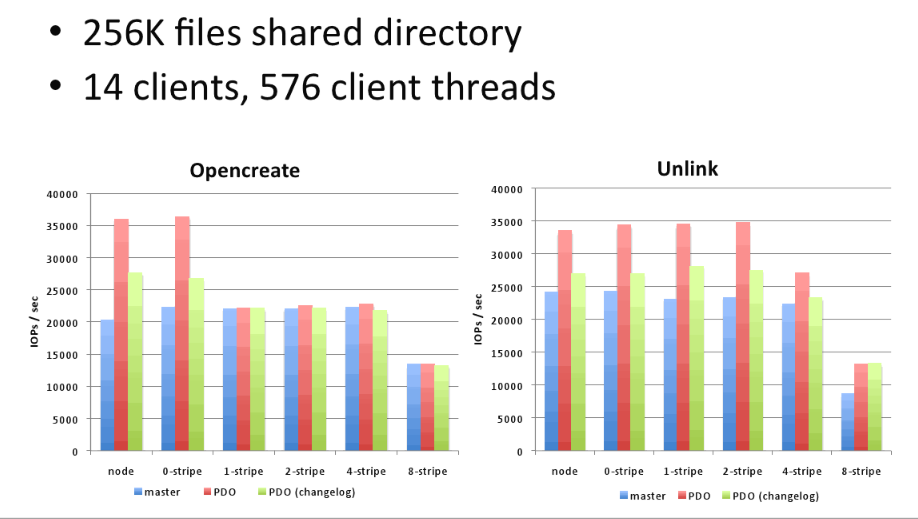

One unexpected result is visible in the figure: for stripe counts greater than 0, Opencreate shows only a small performance increase. After further investigation, the cause was judged to be a performance issue in the MDD->LOV->OSC->OST path. This will be investigated further during the SMP Node Affinity project.

The complete Demonstration Milestone is available at: http://wiki.whamcloud.com/display/opensfs/Demonstration+Milestone+for+Parallel+Directory+Operations

Delivery

Complete code is available at:

http://review.whamcloud.com/#change,375

Commit at which code completed Milestone review by Senior and Principal Engineer at: